Extension Educator - Data Entry

Entering Data - Extension Educator

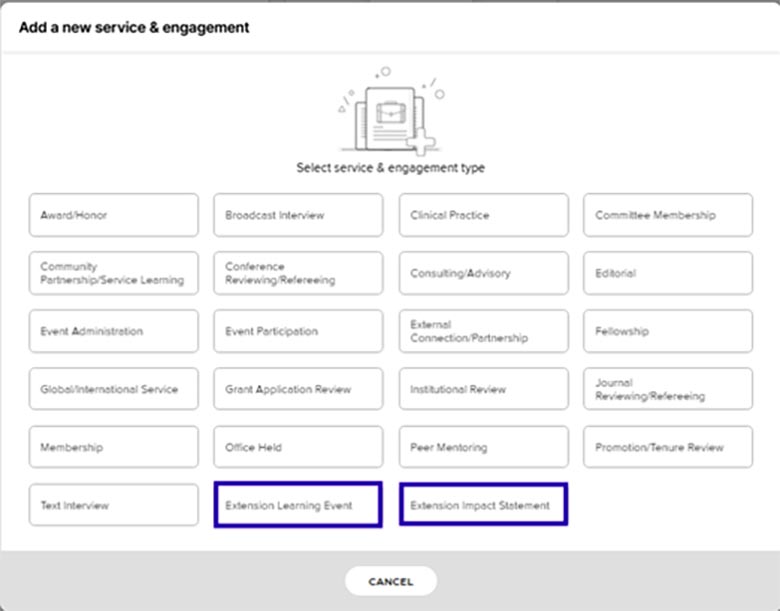

Learning Events & Impact Statements

- There are two “cards” in Service that apply specifically to Extension work.

- NIFA Critical Issues are used as categories to identify all Extension entries. Review the descriptions and examples to determine the critical issue that best fits the Extension Learning Event or Impact Statement.

Demographic Data Collection

- Purdue Extension personnel are to collect demographic information required by the USDA.

- This document provides information and instructions for Extension personnel.

- Here are Purdue Extension’s demographic survey options:

  - Paper surveys

    - Youth - 1/2 page PDF

    - Youth - 1/2 page Spanish (updated May 2022)

    - Adult - 1/2 page PDF

    - Adult - 1/2 page Spanish (updated May 2022)

    - If you would like to use 1/4 page survey templates (youth and/or adult, English and/or Spanish), please request them by emailing COAelements@purdue.edu.

  - Online Survey - Qualtrics Survey File (QSF)

    - Youth (under age 18)

    - Adult (age 18 and older)

    - Please send an email to evaluation@purdue.edu to request the QSF for the youth and/or adult demographic surveys.

    - Instructions for importing a QSF to your Purdue Qualtrics account

- Email evaluation@purdue.edu if you have questions.

If you prefer to “tally” survey responses, here is an Excel spreadsheet that may be helpful.
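For those who keep responses in a script rather than a spreadsheet, the tally can be sketched in a few lines of Python. This is only an illustration: the field names (`age_group`, `gender`) and the answer values are hypothetical placeholders, not the actual questions or categories on the Purdue Extension surveys.

```python
from collections import Counter


def tally_responses(responses, field):
    """Count how many respondents gave each answer for one survey field.

    Blank or missing answers are counted under "No response" so that the
    totals always add up to the number of surveys collected.
    """
    counts = Counter()
    for response in responses:
        answer = (response.get(field) or "").strip()
        counts[answer if answer else "No response"] += 1
    return dict(counts)


# Hypothetical example data: one dict per returned survey.
surveys = [
    {"age_group": "Adult", "gender": "Female"},
    {"age_group": "Adult", "gender": "Male"},
    {"age_group": "Youth", "gender": ""},
    {"age_group": "Adult"},
]

age_tally = tally_responses(surveys, "age_group")
gender_tally = tally_responses(surveys, "gender")
print(age_tally)
print(gender_tally)
```

Counting blanks explicitly makes it easy to check that each tally sums to the number of surveys returned.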

Educator Reporting Expectations

Expectations From the Director

Schedule for Reporting

- September 1 is the deadline for the Educator annual review process.

- Monthly

  - By the 5th business day, report monthly:

    - Communiqué to the Area Director, and

    - LEARNING EVENTS (education programs/workshops delivered to the public in person or via technology) in Elements.

- As appropriate

  - When data are in hand to report results of program evaluations, outcomes, and impact, enter IMPACT STATEMENTS (narratives sharing the success of a program) in Elements.
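
The 5th-business-day deadline in the schedule above can be worked out with a short script. This is a minimal sketch under stated assumptions: business days are taken to be Monday through Friday, university holidays are only skipped if you pass them in yourself, and the function name `nth_business_day` is made up for this illustration. Check the result against the official calendar.

```python
from datetime import date, timedelta


def nth_business_day(year, month, n=5, holidays=frozenset()):
    """Return the n-th business day of the given month.

    Assumption: a business day is any Monday-Friday that is not listed
    in `holidays` (a set of datetime.date objects supplied by the caller).
    """
    day = date(year, month, 1)
    count = 0
    while True:
        if day.weekday() < 5 and day not in holidays:
            count += 1
            if count == n:
                return day
        day += timedelta(days=1)


# April 2025 starts on a Tuesday, so the 5th business day is Monday, April 7.
april_deadline = nth_business_day(2025, 4)
print(april_deadline.isoformat())

# Treating Monday, September 1, 2025 as a holiday pushes the date back one day.
sept_deadline = nth_business_day(2025, 9, holidays={date(2025, 9, 1)})
print(sept_deadline.isoformat())
```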

Targeted Set of Metrics in Elements

- The totality of Educators’ efforts is appreciated, but in Elements, we do not try to capture everything Educators do. The approach is to collect a targeted set of metrics focused on key activities.

- The communiqué is more flexible than Elements. Its narrative format lets Educators document progress, marketing, and other activities as needed.

- Also, CED administrative tasks will go in the communiqué only, not in Elements.

- Lastly, please remember that OUTPUTS are the first step. We also need to report OUTCOMES and IMPACTS, which are the most important information we share.

Monthly Communiqué or Elements?

- Educators do both each month.

- Use the communiqué for reporting activities toward your goals.

- Go to Elements to enter Learning Events each month, and Impact Statements when appropriate.

Program Planning vs. Metrics

- The monthly communiqué and Elements serve different purposes. In the monthly communiqué, you report the progress of your efforts and everything you do in the planning process.

- Then when you implement your program (Learning Event), you report that in Elements.

- See this diagram.

DATA – CONTINUOUS QUALITY IMPROVEMENT

UPDATED APRIL 2025

Continuous quality improvement for program evaluation and reporting in Elements includes these activities:

- Consistent communication, instructions, program evaluation guidance, and Elements tips for data entry,

- Monthly administrative reviews by Leadership for communication to Educators with follow-up, clarification, and corrections,

- Quarterly analysis and comparison of unified evaluation survey and Elements reporting data, and

- Annual audits of Elements data with six Educators.

- The Evaluation and Reporting team communicates with Extension Leadership as new or updated information is available. Area Directors and Program Leaders share information through their communication channels, newsletters, and emails.

- Resources for program evaluation are posted on the Extension Hub.

- Elements resources are posted on the College of Ag’s Elements website and embedded in Elements on each screen and via help tips (question marks by each item). Instruction documents link to more specific information and resources.

- Elements help via Zoom is made available periodically for drop-in assistance and questions.

- Extension leadership reviews the data entered by their staff to check for regular, consistent, and timely reporting, and for accuracy and completeness of the data. When discrepancies are found, Leadership communicates with Educators to assess the information and work toward improved data reporting.

- The Evaluation and Reporting team completes quarterly reviews of:

  - unified program evaluations for complete survey data entry and email uploads if appropriate

  - Elements learning events data for accuracy

- The Evaluation and Reporting team compares unified program evaluation data and Elements learning events reporting for consistent and complete data entry.

- The Evaluation and Reporting team emails Educators about potential issues in program evaluation or Elements data entry. If Educators are not responsive, supervisors are copied on emails.

- Six Educators (one from each geographic area) are randomly selected to show how they documented and tracked the data they entered in Elements.

- The audit process is a review of the data entered for Learning Events and Impact Statements.

- Educators will be asked to share their process for gathering data and the documentation that they have for what they entered into Elements.

- In May and June, the Evaluation and Reporting team will contact the selected Educators, along with their Area Directors and Program Leaders, to schedule a 1-hour appointment at a time in the coming months that fits the Educator's calendar.
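
The random selection of one Educator from each geographic area, as described above, could be done along these lines. This is a hypothetical sketch, not the Evaluation and Reporting team's actual procedure: the area labels, Educator names, and the `select_for_audit` function are all placeholders invented for the example.

```python
import random


def select_for_audit(educators_by_area, seed=None):
    """Pick one Educator at random from each area.

    `educators_by_area` maps an area label to a list of Educator names.
    Passing a `seed` makes the draw repeatable, which can help when the
    selection itself needs to be documented.
    """
    rng = random.Random(seed)
    return {
        area: rng.choice(sorted(names))
        for area, names in educators_by_area.items()
        if names  # skip areas with no eligible Educators
    }


# Placeholder roster; real area names and staff lists would replace these.
roster = {
    "Area 1": ["Educator A", "Educator B", "Educator C"],
    "Area 2": ["Educator D", "Educator E"],
    "Area 3": ["Educator F"],
}

selected = select_for_audit(roster, seed=2025)
for area, name in selected.items():
    print(f"{area}: {name}")
```

Sorting the names before drawing means the result depends only on the roster contents and the seed, not on the order in which names were entered.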

If you have questions about:

- Program evaluation, email evaluation@purdue.edu.

- Reporting in Elements, email COAelements@purdue.edu.