Using artificial intelligence to understand the natural world

For millennia, the development of new tools has been key to major leaps forward in agricultural efficiency and environmental management. But what kind of tool could help us monitor the welfare of cattle, assess the response of rice yields to climate change, make it easier to detect early signs of crop disease from drone data, provide data-driven decision-making tools for productive food systems, or sort the complexities of urban ecosystems?

Purdue Agriculture researchers are harnessing the power of artificial intelligence (AI) and machine learning (ML) to amplify their ability to solve agricultural and environmental problems. With AI, they enable computers to mimic human intelligence. With ML, a branch of AI, they teach computers to learn from experience and detect patterns in large masses of data.

Understanding those patterns helps researchers in the departments of Forestry and Natural Resources, Agronomy and Agricultural and Biological Engineering advance their research and share valuable information with the stakeholders who need it most.

Understanding complicated urban ecosystems

Last year a special issue of Frontiers in Ecology and the Environment called out the importance of applying AI and ML to process the massive 3D datasets that digital technology now generates.

Serving as a co-editor of that special issue was Brady Hardiman, associate professor of forestry and natural resources and environmental and ecological engineering. Hardiman routinely uses AI and ML in his work.

“I’m fascinated by cities and motivated to study urban ecosystems because in the US, 80% of us live in a city or an urbanized area,” Hardiman, also a member of Purdue’s Institute for Digital Forestry, says. “If you want to improve lives, that’s where you’re likely to have the biggest impact.”

The contrasts between cities make studying them complicated. “Cities like Baltimore and Chicago have very different histories, cultures, policies and infrastructure in ways that can make it really difficult to generalize what you know about Chicago to make predictions about Baltimore, let alone Phoenix or Portland,” he says. “It’s all the complexity of the natural world plus all the complexity of the human world layered on top.”

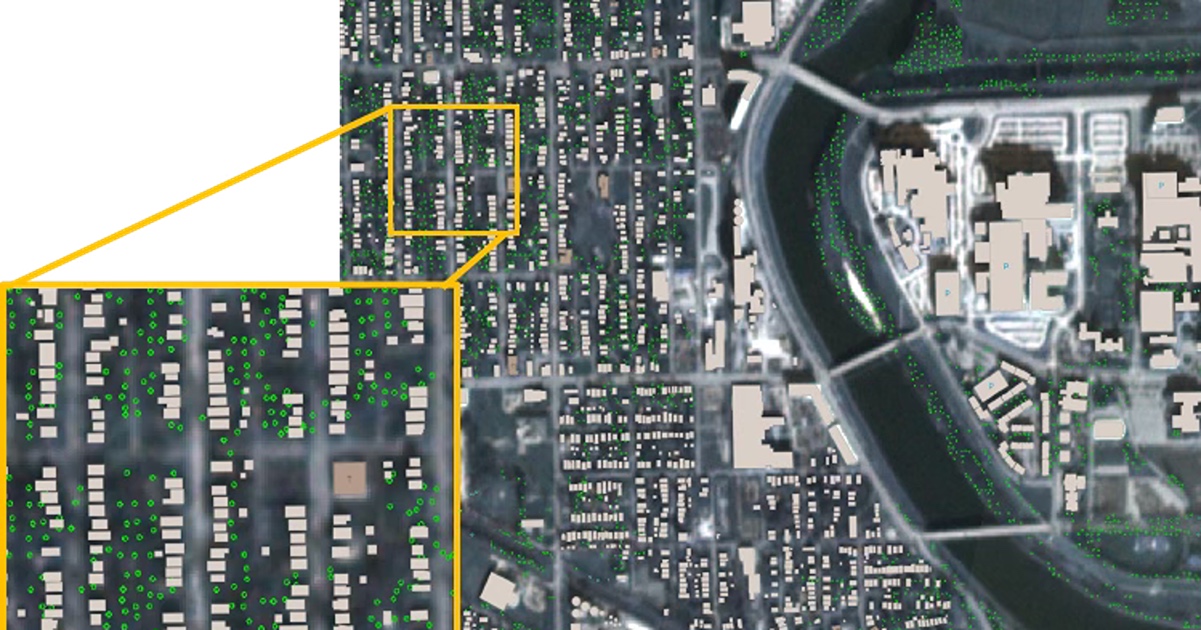

Hardiman’s group analyzes large amounts of remote sensing data and high-resolution imagery covering complex urban landscapes over large spans of space and time. AI and ML help detect patterns, processes and structures that are invisible to the naked eye.

“In urban contexts, we want to know, is a tree a maple or is it an oak? Some species grow better in certain parts of the city than others,” he says. “Putting the right tree in the right place is important in cities, and being able to know what trees are growing on the landscape is an important part of managing urban forests.”

For a project in Chicago, Hardiman’s team tracks the spread of invasive buckthorn shrubs through city forest preserves with aerial light detection and ranging (LiDAR) data.

“We’re using machine learning approaches to analyze the data and identify structural signatures of forests that have been invaded by this invasive species. We can map it across the landscape, which is important for forest managers trying to conserve those forests.”

In rural environments, ML similarly helps the researchers extract features of interest from high-resolution remote sensing data. “We want to know the identity of the trees growing in our forests for timber production, for high value applications. Furniture making or barrel staves from white oaks for the bourbon industry in Kentucky, for example, are a big deal in southern Indiana,” he says.

Medical robots for animals

Animal scientists often need little more than their hands and a syringe to take biological samples from cows. But obtaining highly accurate data for nutrition, sustainability practices and even methane output calls for far more precise methods. Upinder Kaur, assistant professor of agricultural and biological engineering, is developing an AI-enhanced medical robot that readily collects this data.

“This robot is the first such medical robot for animals. It can swim inside the stomach of the cow. It can monitor methane, temperature, pH and other biomarkers to give you a much richer detail of how the rumen is working,” Kaur says.

AI helps reduce the computational, power and memory resources required to operate the robot. As a result, the robot performs more consistently and runs longer before its batteries need recharging or the robot itself needs replacing.

The robot can provide better data regarding methane emissions from beef and dairy cattle for greenhouse gas studies. The instrument that measures methane emissions from cattle is expensive and involves a mask that monitors only one cow at a time — and even then, only for 10 or 20 minutes each time.

“You cannot capture this data when they’re moving, lying down, eating and drinking water,” Kaur says. But experiments with her robot have shown that these activities exert a major impact on the daily cycle of methane production.

“It’s not a constant value. Breathing changes with the activity,” Kaur says. The current methane data lack these nuances, a gap that leads to imprecise models that fail to match large-scale observations. Kaur’s team is working to make the robot, now about the size of a cell phone, small enough for a cow to swallow.

The current model is inserted through a cannula, a surgically created port that provides access to the cow’s stomach for sampling studies. Kaur’s robot also contributes to animal welfare by providing highly detailed methane volume data every 10 or 20 seconds for a full day.

If a cow is giving birth, for example, the robot can provide a variety of biomarker data every 10 seconds until delivery. This means that farmhands can act fast if they see a cow’s condition worsening.

“Our goal has always been to deliver solutions that will improve not only the life of these animals, but also to reduce the burdens on farmers,” she says.

Also in development in Kaur’s lab, with colleagues Catherine Hill, professor and head of the Department of Entomology, and Maria Murgia, a research scientist, is a robot “dog” that uses sensors to find hidden ticks and identify hotspots of tick activity. Tracking tick populations is essential for controlling tickborne diseases, and using a robot spares humans this complex task.

Upinder Kaur and Catherine Hill with the tick-sensing robot dog.

Insights into crop yields and climate change

Sajad Jamshidi fished at Parishan Lake as a boy. Years later, he questioned why Parishan, once the largest freshwater lake in Iran, had dried up. He wondered how climate had affected the lake.

“It happened all over the world. This will happen again and again if we don’t find a solution,” says Jamshidi, a postdoctoral scientist in agronomy. “Then I got interested in using statistical analysis to see how climate works, how it affects natural resources.”

Jamshidi now specializes in agriculture, hydrology and geospatial analysis. Working in the laboratory of Diane Wang, associate professor of agronomy, he simulates crop yields under various future climate scenarios. Last March Jamshidi and Wang co-authored a paper in the Proceedings of the National Academy of Sciences that combined 10 machine learning models to assess the effect of public breeding trials on rice yields and to identify which varieties might perform best under future climate conditions.

“Using machine learning to apply genotypic information at a broad spatial scale, integrating genetic traits across diverse environments, represents one of the first attempts in this specific context,” he says.

The researchers initially relied on one model to generate their results. “It wasn’t our intention to use this ensemble approach,” Jamshidi says. But after seeing the results generated by the first model, he decided to try another one. He saw somewhat different results generated by the second model.

“And then I used another one and another one,” he says. In the end, he decided to use the ensemble approach because it could combine the strengths of multiple models to generate more accurate results.
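To make the ensemble idea concrete, here is a minimal Python sketch, not the study's actual method: it simply averages the outputs of three toy yield models. The model functions, their coefficients, the feature names `temp_c` and `rain_mm`, and the scenario values are all hypothetical, and the published work may combine its 10 models in a different way.

```python
from statistics import mean


def ensemble_predict(models, features):
    """Average the predictions of several models for one scenario."""
    return mean(model(features) for model in models)


# Hypothetical stand-ins for trained models (illustrative only).
def linear_model(f):
    # Yield falls steadily as temperature rises.
    return 10.0 - 0.2 * f["temp_c"]


def threshold_model(f):
    # Yield drops sharply once a heat threshold is crossed.
    return 6.0 if f["temp_c"] < 30 else 3.0


def rainfall_model(f):
    # Yield rises with seasonal rainfall.
    return 2.0 + 0.01 * f["rain_mm"]


models = [linear_model, threshold_model, rainfall_model]
scenario = {"temp_c": 25.0, "rain_mm": 300.0}  # made-up climate scenario

individual = [model(scenario) for model in models]
prediction = ensemble_predict(models, scenario)
print(individual, prediction)
```

Each toy model gives a slightly different answer for the same scenario, which mirrors what Jamshidi saw. Averaging smooths out the quirks of any single model.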

Wang’s team worked with data from the Southern U.S. rice growing region from 1970 to 2015. During that time, public, state-based breeding programs developed more resilient rice varieties, the team found.

The breeders had managed to develop rice varieties more resilient to a warming climate without intending to. Their goals were focused on developing higher-yield rice varieties. But as they tested these varieties, the climate in the rice-growing region of the Southern U.S. became warmer.

“They were testing varieties that were naturally interacting with the warming climate without them knowing,” he says. “It happened naturally, over time, without that specified goal.”

Using the framework that the Purdue team developed, breeders can rapidly test the response of different rice varieties to factors such as climate, temperature and rainfall in a few simulations rather than spending years growing them in the field.

Technological tethers for agriculture

As a child growing up in Kenya, Ankita Raturi, assistant professor of agricultural and biological engineering, received her introduction to AI via video games. By the time her family moved to Papua New Guinea when she was in middle school, Raturi already knew how to code.

“You’re in a small island in the middle of the South Pacific. It’s kind of lonely out there. All of a sudden, the internet appears, and technology has now given you this tether to the universe,” she says.

At Purdue, Raturi develops technologies that help agricultural stakeholders tether to useful data. In one of her projects, she developed a web-based recommender system that she sometimes describes as “Netflix for crops.” Much like Netflix’s interactive menus of programming options, Raturi’s cover crop decision support tools allow users to filter data by factors that include location, soil, weather and goals.

“You’re helping people filter through this glut of data to identify the right crop for the right time in the right place,” she says. That theme recurs throughout all seven projects underway in her Agricultural Informatics Lab.

Netflix for crops doesn’t always require complex algorithms, just good data. With or without AI, her main concern is taking a human-centered approach to building innovative technologies that help food system stakeholders do their work.

“We use many types of technology to identify appropriate tools for different problems. AI is just one way we can do this,” Raturi says. “I don’t necessarily see AI as a novelty. It’s more the way in which we’re building it. You can build better and faster together in large groups for more diverse use cases.”

One such project, in collaboration with doctoral student Megan Low, uses agent-based models — computer simulations that study entities interacting with each other — to represent foodsheds for data-driven policymaking. Derived from the watershed concept, a foodshed encompasses a region where food flows from production to consumption. They design the foodshed models to allow both policymakers and food coordinators to ask questions that balance food security outcomes with their own needs.

Yet another project stresses regenerative or small-scale, diversified agriculture. “Usually, you increase resilience of agriculture by introducing diversity into food and farming systems to support sustainable ag practices that we know are proven,” she says.

“To make decisions that consider their own needs along with the needs of the people they serve, a policymaker or a farmer needs to be able to weigh the tradeoffs by asking ‘what-if’ questions of the data. There is no such thing as the right decision. It’s just a good decision for this specific area of work.”

Building algorithms for small devices

Somali Chaterji, associate professor of agricultural and biological engineering and electrical and computer engineering, applies ML to build algorithms that use input data to provide outputs that help answer questions in fields including computing, health and agriculture.

For example, ML can help farmers scout their fields for signs of crop diseases more efficiently. In digital agriculture, detecting rare or emerging disease outbreaks is critical to prevent small problems from becoming widespread outbreaks. Chaterji’s recent work on semi-supervised semantic segmentation (a term that refers to models trained on both labeled and unlabeled data) achieves its largest gains when only a small fraction of samples is labeled, making it ideal for those hard-to-capture outbreaks.

Labeled data provides the context that guides the model’s learning (for example, images tagged “blight” or “rust”), while unlabeled data forces the model to discover patterns on its own. Chaterji’s models start with a handful of expert-annotated leaf images, then automatically “fill in the blanks” by tagging other photos the algorithm is very confident about. This expands the training set without extra human effort and maintains top-tier accuracy even when initial labels are sparse or uneven.
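The "fill in the blanks" step is often called pseudo-labeling or self-training. The Python sketch below shows the general idea under heavy simplifying assumptions: each image is reduced to a single made-up "discoloration score," the classifier is a simple nearest-centroid rule, and the 0.9 confidence threshold is arbitrary. None of the function names or values come from Chaterji's work, which operates on pixel-level segmentation rather than whole-image labels.

```python
def centroids(labeled):
    """Mean feature value per class, from (value, label) pairs."""
    sums = {}
    for value, label in labeled:
        total, count = sums.get(label, (0.0, 0))
        sums[label] = (total + value, count + 1)
    return {label: total / count for label, (total, count) in sums.items()}


def predict(cents, value):
    """Return (label, confidence); needs at least two classes.

    Confidence is the share of distance owed to the runner-up class,
    so it is 0.5 for a tie and approaches 1.0 near a centroid.
    """
    ranked = sorted(cents, key=lambda lab: abs(value - cents[lab]))
    d_best = abs(value - cents[ranked[0]])
    d_next = abs(value - cents[ranked[1]])
    total = d_best + d_next
    confidence = 1.0 if total == 0 else d_next / total
    return ranked[0], confidence


def self_train(labeled, unlabeled, threshold=0.9, max_rounds=5):
    """Grow the labeled set with confident predictions, then refit."""
    labeled, pool = list(labeled), list(unlabeled)
    for _ in range(max_rounds):
        cents = centroids(labeled)
        confident, remaining = [], []
        for value in pool:
            label, conf = predict(cents, value)
            if conf >= threshold:
                confident.append((value, label))
            else:
                remaining.append(value)
        if not confident:
            break  # nothing new to learn from
        labeled.extend(confident)
        pool = remaining
    return labeled, pool


# Two expert-labeled samples and five unlabeled ones (all hypothetical).
seed = [(0.1, "healthy"), (0.9, "blight")]
unlabeled = [0.05, 0.15, 0.5, 0.85, 0.95]
expanded, leftover = self_train(seed, unlabeled)
print(len(expanded), leftover)
```

In this toy run the four clear-cut samples receive labels automatically, while the ambiguous one stays unlabeled, so a human expert would only need to look at the hard case.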

This capability has direct applications in networks of ground sensors and drone fleets for continuous agricultural surveillance. Low-cost cameras capture vast swaths of cropland, the model flags suspicious patches in real time, and farmers can target treatments precisely — saving time, reducing chemical use and boosting yields.

“Typically, you don’t have copious amounts of labeled data, especially when you’re going into uncharted territory,” Chaterji says. “Evaluation against standard baselines — benchmark algorithms run on the same small, labeled fractions — shows our lead only widens as labels become scarcer. Thus, we outperform algorithms using fewer labels. And as we tap into more unlabeled data, our advantage grows.”

Algorithms for assessing crop diseases work best when fed multiple datasets from fields that assign specific diseases to certain percentages of the crops based on leaf discoloration.

Some of the latest work in Chaterji’s Innovatory for Cells and Neural Machines (ICAN) lab is the recently developed Agile3D, a LiDAR-based perception algorithm for resource-constrained platforms such as drones, self-driving cars and autonomous tractors, so that everyday devices can run sophisticated models without draining batteries or relying on constant connectivity.

A recurrent theme across her projects is resource-aware training and inference. The methods are designed to use as few computing resources as possible, so they can run directly on small devices or sensors in the field, without needing expensive hardware or constant network connections to the cloud. ICAN has recently also incorporated training to make the algorithms resilient to noise — random or irrelevant variations in the data — whether caused by natural factors like weather or by malicious tampering. Both of these explorations are funded by Chaterji’s prestigious National Science Foundation CAREER award, and she regularly presents her research at top conferences for computer vision and mobile systems.

“As the world generates more data in various forms — be it video, audio, LiDAR, even multispectral images — it becomes increasingly important to process this data close to where it is generated. My work on ML execution on small devices is geared toward achieving this, rightsizing algorithms to their host platforms,” Chaterji says. “This enables real-time decisions, cuts energy use and broadens access to AI.”

This research is a part of Purdue’s presidential One Health initiative, which involves research at the intersection of human, animal and plant health and well-being.